After migrating an InfluxDB setup to Google Kubernetes Engine (GKE), we saw a lot of timeout errors in the logs of the ingress-nginx controller:

upstream timed out (110: Connection timed out) while reading response header from upstream

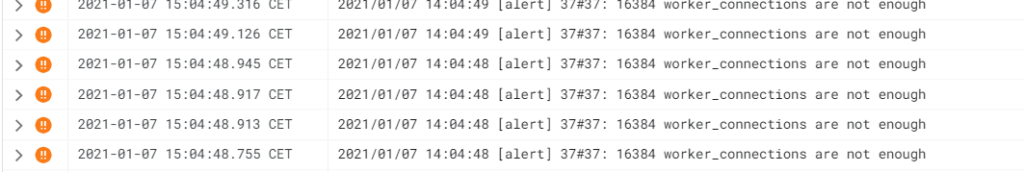

After thousands of these messages, ingress-nginx was killed by the kubelet because its health checks were failing. The checks failed because nginx had run out of worker_connections and at some point stopped answering requests.

First, we tuned some nginx settings and later did a deep dive into the UDP settings for InfluxDB. In the end, nothing helped; it only delayed the nginx kill by a few hours. RAM usage and the number of used worker connections kept increasing until the container was killed again.

I worked in the video streaming business for many years and know that UDP is a “fire and forget” protocol. With TCP you have handshakes and information about received and lost packets; with UDP the sender does not know whether a packet it sent reached its destination.

In our setup, the sender is nginx (though it is more of a forwarder) and the receiver is InfluxDB. So why is nginx complaining about not getting a response from InfluxDB? We checked the nginx.conf again:

    kubectl exec -it -n ingress-nginx ingress-nginx-controller-54648bd857-9j6fl -- cat /etc/nginx/nginx.conf

    ......

    # UDP services
    server {
        preread_by_lua_block {
            ngx.var.proxy_upstream_name="udp-influxdata-influxdb-9448";
        }

        listen 9448 udp;

        proxy_responses 1;
        proxy_timeout 600s;
        proxy_pass upstream_balancer;
    }

    ......

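proxy_responses 1; is the interesting one here. It tells nginx to expect one response datagram from the upstream for each client datagram. The UDP input of InfluxDB never sends a response to nginx, so this setting makes no sense here: each session stays open waiting for a reply until proxy_timeout expires, and nginx starts refusing new connections once all worker connections are used up.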

After setting proxy_responses 0; everything ran smoothly and the nginx restarts were gone.
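For reference, the corrected UDP server block would look roughly like the sketch below. It is the block from our nginx.conf with only proxy_responses changed. Since ingress-nginx regenerates nginx.conf from its own template, the value has to come from the controller configuration rather than from editing the file by hand. The ConfigMap key for this appears to be `proxy-stream-responses`, but treat that name as an assumption and check it against the documentation for your controller version.

```nginx
# UDP services
# Sketch: same block as in the original nginx.conf, only proxy_responses differs.
server {
    preread_by_lua_block {
        ngx.var.proxy_upstream_name="udp-influxdata-influxdb-9448";
    }

    listen 9448 udp;

    # 0 = no response datagram is expected from the upstream,
    # so nginx does not keep the session open waiting for a reply.
    proxy_responses 0;
    proxy_timeout 600s;
    proxy_pass upstream_balancer;
}
```

After the controller reloads, the same kubectl exec command as above can be used to confirm that the generated nginx.conf contains the new value.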